The AI revolution has dramatically raised productivity expectations for software developers. To deliver higher value on accelerated timelines and remain competitive, engineers must adopt AI-native workflows that fully leverage coding agents. As AI tools become standard in daily development work, understanding which tools to use for each task is no longer optional—it's essential for career competitiveness and maximizing your impact.

Most developers hit a wall after initial GitHub Copilot setup—struggling with complex features, fragmented workflows, and constant context management. This guide goes beyond basic usage to show you how to properly configure Copilot, integrate MCP servers and tools, and establish spec-driven development workflows with smart context engineering. Learn the practices that separate casual AI users from true 100x AI-native engineers.

AI has raised the productivity bar. To stay competitive, developers must adopt AI-native workflows that turn coding agents from assistants into force multipliers.

What Is an AI Coding Agent?

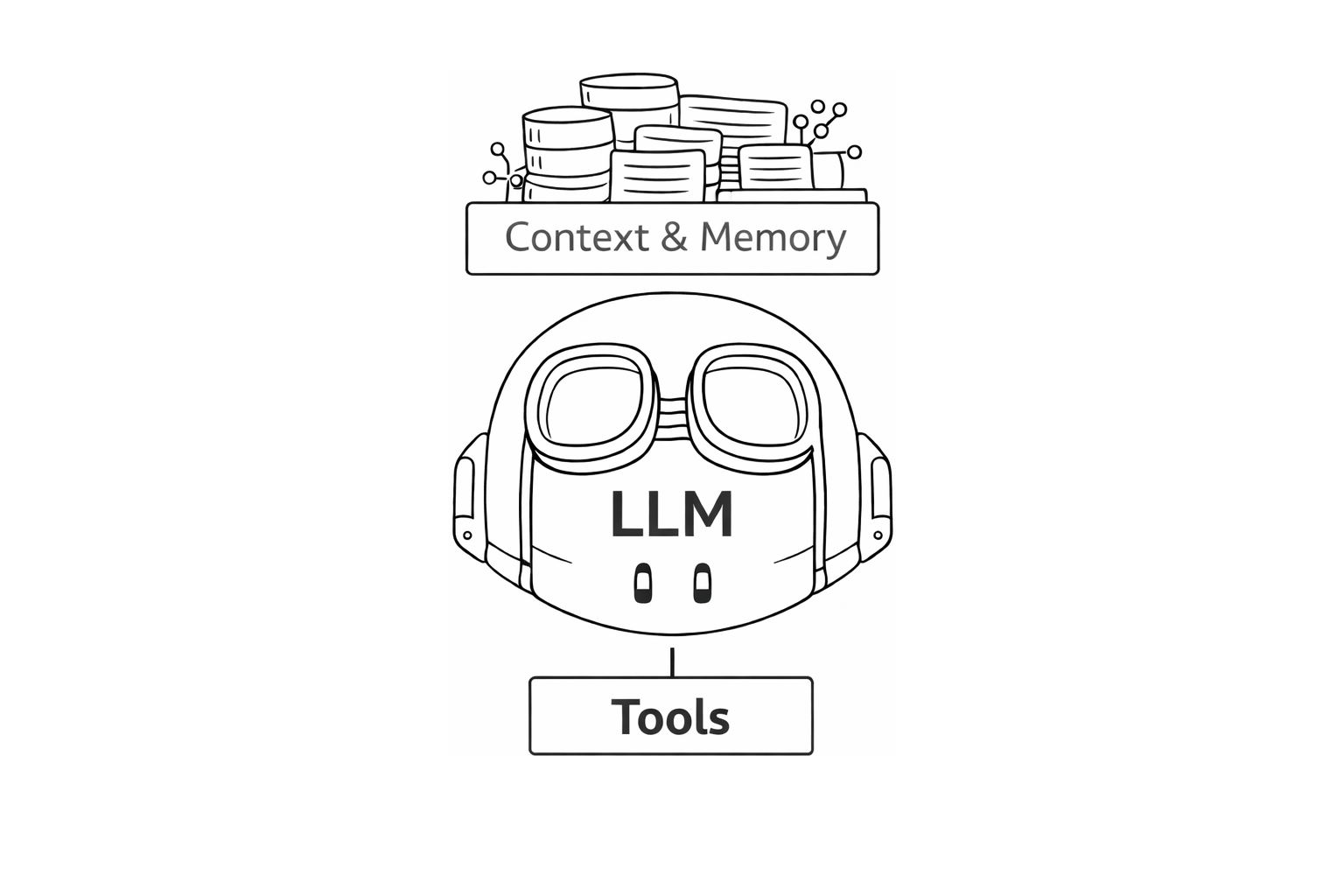

Effective AI coding agents are built on a few foundational components that work together to transform them from simple autocomplete tools into intelligent development partners, or pair programmers.

1. LLM Selection (Intelligence)

Fast models for simple tasks, general-purpose for daily coding, reasoning models for complex problems

Lightweight models respond faster, advanced models analyze deeper

Vision models for UI work, agent mode for workflows, code-specialized for generation

Auto mode: Let Copilot auto-select the model, and override only for specific needs

2. Context Understanding (Memory)

Maintains "short-term" memory of current tasks and "long-term" memory of entire codebase structure

Access to relevant code, documentation, and project structure through indexing or RAG

Understanding of your codebase architecture and patterns

Awareness of dependencies, frameworks, and tech stack

3. Reasoning & Planning

Uses "Chain-of-Thought" to decompose tasks (e.g., "Add a login button") into sub-tasks (Frontend UI → API Route → Database Schema)

Understanding requirements and edge cases

Systematic planning before executing code changes

4. MCP & Tool Integration

Must have access to terminal, file system, and browser to execute real work

IDE/editor integration for seamless workflow

Connection to version control, APIs, and external services (MCP)

5. Iteration & Feedback Loop (Guardrails)

Uses linters, type-checkers, and unit tests to self-correct before showing results

Learning from errors and corrections through automated validation

Refining outputs based on test results and static analysis

Adapting to your coding style and preferences over time
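The guardrail loop described above reduces to a simple pattern: generate a candidate, run the checks in order, and feed the first failure back into the next attempt. The sketch below is a toy under explicit assumptions: `fake_model` is a hypothetical stand-in for an LLM call, and the two gates (a syntax check via Python's built-in `compile` and a one-assertion unit test) stand in for real linters, type-checkers, and test suites.

```python
from typing import Callable, List, Optional, Tuple

Generate = Callable[[str, List[str]], str]  # (task, feedback so far) -> candidate source
Gate = Callable[[str], Optional[str]]       # candidate source -> error message, or None if OK


def first_error(candidate: str, gates: List[Gate]) -> Optional[str]:
    """Run gates in order and stop at the first failure (lint before tests)."""
    for gate in gates:
        error = gate(candidate)
        if error is not None:
            return error
    return None


def self_correct(task: str, generate: Generate, gates: List[Gate],
                 max_attempts: int = 3) -> Tuple[Optional[str], List[str]]:
    feedback: List[str] = []
    for _ in range(max_attempts):
        candidate = generate(task, feedback)
        error = first_error(candidate, gates)
        if error is None:
            return candidate, feedback  # passed every gate
        feedback.append(error)          # the model sees this on its next attempt
    return None, feedback               # give up and surface the errors to a human


def syntax_gate(src: str) -> Optional[str]:
    try:
        compile(src, "<candidate>", "exec")
        return None
    except SyntaxError as exc:
        return f"SyntaxError: {exc.msg} (line {exc.lineno})"


def unit_test_gate(src: str) -> Optional[str]:
    namespace: dict = {}
    exec(src, namespace)  # toy only: never exec untrusted code outside a sandbox
    return None if namespace["add"](2, 3) == 5 else "test_add failed: add(2, 3) != 5"


def fake_model(task: str, feedback: List[str]) -> str:
    # Hypothetical model: the first draft has a syntax error; it fixes it once it sees feedback.
    if not feedback:
        return "def add(a, b)\n    return a + b\n"
    return "def add(a, b):\n    return a + b\n"


code, history = self_correct("implement add()", fake_model, [syntax_gate, unit_test_gate])
print(history)
```

Capping attempts matters: without `max_attempts`, an agent that keeps failing the same gate would loop indefinitely instead of handing the problem back to you.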


1. LLM Selection: Choosing the Right Intelligence for Your Task

Selecting the appropriate Large Language Model (LLM) is crucial for maximizing your AI coding agent's effectiveness. The right model can transform your agent from a basic autocomplete tool into an intelligent development partner. Think of LLM selection as choosing the right tool from your toolbox—different tasks demand different capabilities.

Understanding Model Categories

AI coding agents support multiple models across three main categories:

Fast Models (Speed-Optimized): Claude Haiku 4.5, Gemini 3 Flash—ideal for simple, repetitive tasks requiring quick responses like syntax fixes, utility functions, and rapid prototyping.

General-Purpose Models (Balanced): GPT-4.1, Claude Sonnet 4.5, GPT-5-Codex, Grok Code Fast 1—the workhorses for everyday development including feature implementation, code review, and documentation.

Reasoning Models (Quality-Optimized): GPT-5, Claude Opus 4.1, Gemini 2.5 Pro—designed for complex problems requiring deep analysis, architectural decisions, and multi-step reasoning.

Two Approaches to Model Selection

Start Fast, Upgrade If Needed: Begin with Claude Haiku 4.5 or similar fast models for quick iteration and lower development costs. Test thoroughly, then upgrade only if you hit capability gaps. Best for prototyping, high-volume tasks, and cost-sensitive applications.

Start Powerful, Optimize Later: Begin with Claude Sonnet 4.5 or GPT-5 for complex tasks where intelligence is paramount. Optimize prompts for these models, then consider downgrading as your workflow matures. Best for autonomous agents, complex reasoning, scientific analysis, and advanced coding.

Specialized Capabilities to Leverage

Agent Mode: Models like GPT-4.1, Claude Sonnet 4.5, and Gemini 3 Flash support multi-step workflows—breaking down tasks, executing code, reading error logs, and iterating until tests pass.

Vision Support: GPT-5 mini, Claude Sonnet 4, and Gemini 2.5 Pro can analyze screenshots, diagrams, and UI components—essential for frontend work and visual debugging.

Code Specialization: GPT-5-Codex and Grok Code Fast 1 excel at code generation, debugging, and repair across multiple languages.

Decision Framework

Match your task to the right model tier:

Simple/repetitive → Fast models

Daily development → General-purpose models

Complex/architectural → Reasoning models

UI/visual work → Vision-enabled models

Multi-step workflows → Agent-capable models

Use GitHub Copilot's Auto mode for general work, but override manually when you need specific capabilities like extended reasoning, vision analysis, or speed optimization.
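Writing the decision framework down as a small lookup helps a team apply it consistently. The sketch below is illustrative only: the `TaskKind` categories and tier labels are assumptions drawn from the list above, not a Copilot API, and the function returns a label you would then act on in the model picker. Daily development maps to "auto" to reflect the advice to leave Auto mode in charge for general work.

```python
from enum import Enum


class TaskKind(Enum):
    SIMPLE = "simple/repetitive"
    DAILY = "daily development"
    ARCHITECTURAL = "complex/architectural"
    UI = "ui/visual"
    MULTI_STEP = "multi-step workflow"


# Hypothetical mapping that mirrors the decision framework in the text.
# "auto" means: leave Copilot's Auto mode in charge instead of overriding.
TIER_FOR_TASK = {
    TaskKind.SIMPLE: "fast",
    TaskKind.DAILY: "auto",
    TaskKind.ARCHITECTURAL: "reasoning",
    TaskKind.UI: "vision-enabled",
    TaskKind.MULTI_STEP: "agent-capable",
}


def choose_tier(kind: TaskKind) -> str:
    """Return the model tier to request for a task (a label, not a model ID)."""
    return TIER_FOR_TASK[kind]


if __name__ == "__main__":
    for kind in TaskKind:
        print(f"{kind.value:>22} -> {choose_tier(kind)}")
```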

Best Practices

Balance speed and quality: Fast models typically respond in 1-3 seconds, while reasoning models often take 5-15 seconds but deliver deeper analysis. Choose based on whether you need immediate feedback or thorough investigation.

Create benchmarks: Test models with your actual prompts and data. Compare accuracy, quality, and edge case handling to make informed decisions.

Iterate strategically: Don't over-optimize early. Start general, track what works, and refine your selection as you understand your workflow patterns.
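A benchmark does not require heavy tooling. The harness below is a minimal sketch under stated assumptions: each model is wrapped as a plain `prompt -> completion` callable (in practice you would plug in whichever API client you use; the stubs here are hypothetical), and each test case pairs one of your real prompts with a pass/fail check. It reports pass rate and mean latency, the two axes the practices above trade off.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]   # prompt -> completion (wrap your real API client here)
Check = Callable[[str], bool]  # completion -> did it meet the acceptance criterion?


def benchmark(models: Dict[str, Model], cases: List[Tuple[str, Check]]) -> Dict[str, dict]:
    """Run every case against every model; report pass rate and mean latency."""
    results = {}
    for name, model in models.items():
        passed, latencies = 0, []
        for prompt, check in cases:
            start = time.perf_counter()
            completion = model(prompt)
            latencies.append(time.perf_counter() - start)
            if check(completion):
                passed += 1
        results[name] = {
            "pass_rate": passed / len(cases),
            "mean_latency_s": mean(latencies),
        }
    return results


# Hypothetical stubs standing in for a fast model and a stronger model.
cases = [
    ("Reverse the string 'abc'", lambda out: out.strip() == "cba"),
    ("Uppercase the string 'hi'", lambda out: out.strip() == "HI"),
]
models = {
    "fast-stub": lambda prompt: "cba",
    "strong-stub": lambda prompt: "cba" if "Reverse" in prompt else "HI",
}

results = benchmark(models, cases)
for name, stats in results.items():
    print(name, stats)
```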

2. Context Understanding (The Memory): Building Intelligence That Remembers

Context is the foundation of effective AI coding agents. Like human developers who build mental models of their codebase, AI agents need both immediate awareness of current tasks and deep understanding of the entire project ecosystem. Without proper context, even powerful LLMs produce generic, misaligned code that ignores architectural decisions.

For detailed guidance on context engineering principles and advanced patterns, read: Context Engineering: The Right Way to Vibe with AI Coding Agents

The Two Types of Memory

Short-Term Memory (Working Context): Your agent's immediate awareness of the current task, open files, recent conversation history, and active problems. This includes task descriptions, explicitly referenced code snippets, recent errors and logs, conversation history, and any images or URLs provided.

Long-Term Memory (Persistent Knowledge): Understanding of the entire codebase, accumulated over time and persisted across sessions. This includes codebase structure (indexed via RAG), architecture documentation, coding standards, approved frameworks, project-specific patterns, and historical decisions.

How Context Gets Delivered

Retrieval-Augmented Generation (RAG): Intelligent search retrieves contextually relevant code. Production systems combine AST-based chunking, grep/file search, knowledge graphs, and re-ranking—retrieval quality, not volume, determines effectiveness.

| Feature | GitHub Copilot | Claude Code | Cursor |
|---|---|---|---|
| Configuration File | | | |
| Custom Instructions File | | | |

Editor Integration: Agents automatically access open files, recently edited code, or @-mentioned references as signals of relevance.

Multi-Level Scoping: Context can be personal preferences, repository-wide standards, or folder-specific rules.

Context as a Finite Resource

More context doesn't mean better results. As context windows grow, models experience "context rot": they lose precision because in a transformer every token attends to every other token, so the number of pairwise interactions grows quadratically with context length and attention is spread ever thinner across irrelevant material. Effective context engineering is about precision, not volume.

LLMs resemble an operating system where the model is the CPU and the context window is RAM. Just as an OS manages memory, context engineering determines what enters the window.
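To make the "context window as RAM" idea concrete, here is a minimal sketch of a context packer: given candidate snippets with a relevance score (from whatever retrieval and re-ranking you use) and an estimated token cost, it drops low-relevance noise and then greedily fills a fixed token budget by relevance per token. The scores, token counts, file names, and the greedy heuristic are all assumptions for illustration; real agents apply their own selection logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Snippet:
    source: str       # file path, doc title, log excerpt, ...
    relevance: float  # retrieval / re-ranking score in [0, 1]
    tokens: int       # estimated token cost


def pack_context(snippets: List[Snippet], budget: int,
                 min_relevance: float = 0.2) -> List[Snippet]:
    """Greedy budgeted selection: precision first, then value per token."""
    candidates = [s for s in snippets if s.relevance >= min_relevance]  # drop noise
    candidates.sort(key=lambda s: s.relevance / max(s.tokens, 1), reverse=True)
    chosen, used = [], 0
    for snippet in candidates:
        if used + snippet.tokens <= budget:
            chosen.append(snippet)
            used += snippet.tokens
    return chosen


snippets = [
    Snippet("src/auth/login.ts", relevance=0.9, tokens=400),
    Snippet("docs/architecture.md", relevance=0.8, tokens=4000),
    Snippet("src/auth/session.ts", relevance=0.5, tokens=200),
    Snippet("CHANGELOG.md", relevance=0.1, tokens=100),
]
selected = [s.source for s in pack_context(snippets, budget=1000)]
print(selected)  # ['src/auth/session.ts', 'src/auth/login.ts']
```

Note that the large architecture document is skipped even though it is relevant: it alone would blow the budget. A real system might summarize such a document rather than drop it, which is the "compact" half of keeping context fresh.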

Best Practices

Start small, expand deliberately: Begin with minimal, high-signal context. Keep core files concise since they're injected into every interaction.

Version control your context: Keep configuration files in Git for traceability and team alignment.

Keep context fresh: Clear context when switching tasks, compact for long sessions, and avoid unchecked growth.

Measure relevance: Track whether agents produce aligned code on first try—refine context based on results, not completeness.

The Goal

Proper context transforms agents from generic code generators into collaborators who understand your project's constraints, patterns, and intent—producing code that integrates cleanly and requires minimal correction.

3. Reasoning & Planning (The Brain): From Prompt to Executable Blueprint

The most common mistake with AI coding agents is jumping straight to implementation. Without a plan, agents generate speculative code that looks confident but ignores constraints, violates architecture, and requires extensive rework. The solution is systematic planning that externalizes reasoning before writing a single line of code.

For comprehensive guidance on planning workflows, read: Context Engineering: The Right Way to Vibe with AI Coding Agents

Research → Plan → Implement (RPI)

RPI is a proven pattern that enforces discipline in how context is created and consumed:

Research: Document what exists today—current architecture, existing APIs, relevant patterns, organizational constraints. This grounds the agent in reality and prevents hallucinations.

Plan: Design the change with clear phases and success criteria. Break work into steps, define acceptance criteria, capture architectural decisions, and identify risks. A good plan transforms "do the thing" into a concrete, reviewable blueprint.

Implement: Execute the plan step-by-step with verification. Follow the plan, validate assumptions, run tests, and adjust only when evidence requires it.

RPI moves teams from reactive prompting to predictable outcomes. Instead of coding immediately, agents produce plans that can be reviewed, refined, and executed with confidence.
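One way to keep RPI honest is to make the plan a concrete, reviewable artifact rather than prose buried in a chat. The sketch below is a hypothetical structure, not the format of any particular tool: research facts, ordered steps, and an acceptance criterion per step, rendered as a checklist a human can review before implementation starts. The "login button" example follows the decomposition mentioned earlier; the research facts are made up.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    description: str
    acceptance: str      # how we'll know it's done, e.g. a test that must pass
    done: bool = False


@dataclass
class Plan:
    goal: str
    research: List[str]                              # Research: facts about the system today
    steps: List[Step] = field(default_factory=list)  # Plan: ordered, verifiable steps

    def next_step(self) -> Optional[Step]:
        """Implement: always work on the first unfinished step, in order."""
        return next((s for s in self.steps if not s.done), None)

    def to_markdown(self) -> str:
        """Render the plan as a reviewable checklist before any code is written."""
        lines = [f"Plan: {self.goal}", "", "Research:"]
        lines += [f"- {fact}" for fact in self.research]
        lines += ["", "Steps:"]
        for i, step in enumerate(self.steps, start=1):
            box = "x" if step.done else " "
            lines.append(f"{i}. [{box}] {step.description} (accept: {step.acceptance})")
        return "\n".join(lines)


plan = Plan(
    goal="Add a login button",
    research=["Sessions are cookie-based", "Users table has no password_hash column"],
    steps=[
        Step("Add password_hash column migration", "migration applies and rolls back cleanly"),
        Step("Add POST /login API route", "integration test returns 200 with valid credentials"),
        Step("Add login button to header", "UI test clicks button and reaches /login"),
    ],
)
print(plan.to_markdown())
```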

Specification-Driven Development (SDD)

RPI is the gateway to Specification-Driven Development, where specifications become the primary artifact. Specs evolve into living PRDs, generate implementation plans, produce code and tests, and get updated based on production feedback—creating a continuous loop where pivots become regenerations, not rewrites.

Tools like GitHub Spec-Kit, Tessl, and Kiro make it easy to apply the RPI pattern in daily workflows.

Key principle: Don't just ask agents to code—ask them to think, plan, and structure their reasoning. Use prompts like "Think hard and produce a step-by-step plan before writing any code." Planning dramatically reduces rework and produces reviewable, maintainable results.

4. Tool Integration (The Hands): Extending Your Agent with MCP

AI coding agents become exponentially more powerful when they can interact with your entire development ecosystem. The Model Context Protocol (MCP) is an open-source standard that enables this connectivity, transforming your agent from an isolated code generator into an orchestrator of your complete toolchain.

What MCP Enables

With MCP integration, you can ask agents to execute cross-system workflows: "Implement the feature from JIRA-4521, create a PR on GitHub, and check Sentry for related production errors" or "Query our PostgreSQL database for inactive users and generate the reactivation email campaign."

Useful MCP Server Integrations

GitHub: Access repositories, issues, PRs, and actions (enabled by default in GitHub Copilot)

Sentry (or similar): Monitor production errors, access stack traces, debug with full context

Databases (PostgreSQL, MySQL): Query for analytics, validate schemas, generate reports

Notion (or similar): Access specs and documentation to keep implementation aligned

Cloud Platforms (Azure, AWS, Cloudflare): Manage infrastructure, access logs and metrics

Communication (Slack, Teams): Fetch requirements shared in channels, post updates

How MCP Works

MCP servers provide tools (functions), resources (data), and prompts (workflows). Agents discover available tools, execute them autonomously when relevant, and integrate results into decision-making—chaining multiple tool calls to complete complex tasks.
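The discover-then-call cycle is JSON-RPC 2.0 under the hood. The toy below is an in-process simulation, not the official SDK: it shows the shape of a `tools/list` request and a `tools/call` request, and the `inputSchema` and `content` fields a server returns. A real session also begins with an `initialize` handshake and runs over a transport such as stdio or HTTP, both omitted here; the `get_issue` tool and its return text are hypothetical.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical tool registry: name -> (description, JSON Schema for arguments, handler).
TOOLS: Dict[str, Tuple[str, dict, Callable[[dict], str]]] = {
    "get_issue": (
        "Fetch an issue by key",
        {"type": "object", "properties": {"key": {"type": "string"}}, "required": ["key"]},
        lambda args: f"{args['key']}: Add rate limiting to API gateway",  # made-up data
    ),
}


def handle(raw_request: str) -> str:
    """Server side: answer tools/list and tools/call; anything else is 'method not found'."""
    req: Dict[str, Any] = json.loads(raw_request)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": name, "description": desc, "inputSchema": schema}
                            for name, (desc, schema, _) in TOOLS.items()]}
    elif req["method"] == "tools/call":
        _, _, handler = TOOLS[req["params"]["name"]]
        text = handler(req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}  # standard JSON-RPC code
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# Agent side: 1) discover what the server offers, 2) call a tool it judged relevant.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
tool_names = [tool["name"] for tool in listing["result"]["tools"]]

call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_issue", "arguments": {"key": "JIRA-4521"}}}
reply = json.loads(handle(json.dumps(call)))
print(tool_names, reply["result"]["content"][0]["text"])
```

The agent never hard-codes what a server can do: it reads the `inputSchema` from the listing and builds `arguments` to match, which is what lets it chain tools across servers it has never seen before.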

Best Practices

Start with read-only tools: Explicitly allowlist specific tools rather than enabling all ("tools": ["*"])

Secure credentials: Store API keys in environment variables or secret managers, never in config files

Scope access appropriately: Follow principle of least privilege for MCP server permissions

Test before production: Validate configurations with simple tasks first
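The allowlist and credential rules above are easy to check mechanically before a config is committed. Below is a minimal, heuristic sketch that walks an `mcpServers`-style dictionary (the shape used in the configuration examples in this guide) and flags wildcard tool lists and values that look like literal secrets. The key-name pattern and the "starts with `$` or `COPILOT_MCP_`" rule for secret references are assumptions; adapt them to your own conventions.

```python
import re
from typing import List

# Heuristic: env keys whose names suggest a credential.
SECRET_NAME = re.compile(r"TOKEN|SECRET|PASSWORD|API_KEY", re.IGNORECASE)


def is_reference(value: str) -> bool:
    """Treat $VAR / ${...} or Copilot's COPILOT_MCP_* secret names as references, not literals."""
    return value.startswith("$") or value.startswith("COPILOT_MCP_")


def audit_mcp_config(config: dict) -> List[str]:
    findings = []
    for name, server in config.get("mcpServers", {}).items():
        if "*" in server.get("tools", []):
            findings.append(f"{name}: wildcard tools; allowlist specific tools instead")
        for key, value in server.get("env", {}).items():
            if SECRET_NAME.search(key) and not is_reference(value):
                findings.append(f"{name}: env {key} looks like a literal secret")
        for key, value in server.get("headers", {}).items():
            if key.lower() == "authorization" and "$" not in value:
                findings.append(f"{name}: Authorization header holds a literal credential")
    return findings


config = {
    "mcpServers": {
        "github": {
            "tools": ["*"],
            "headers": {"Authorization": "Bearer $COPILOT_MCP_GITHUB_PERSONAL_ACCESS_TOKEN"},
        },
        "sonarqube": {
            "tools": ["analyze_code_snippet"],
            "env": {"SONARQUBE_TOKEN": "your_token", "SONARQUBE_ORG": "your-org"},
        },
    }
}
findings = audit_mcp_config(config)
for finding in findings:
    print(finding)
```

Running a check like this in a pre-commit hook or CI job turns the security practices from advice into a gate.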

MCP transforms coding agents into integrated team members who orchestrate your entire development stack—configure access thoughtfully and monitor usage for security.


Configuring MCP Servers Across AI Development Tools

Each platform offers distinct approaches to MCP server configuration, enabling developers to extend AI capabilities by connecting to external tools and data sources. GitHub Copilot integrates MCP through repository settings where administrators add JSON configurations specifying server details, authentication, and available tools—enterprise deployments can also leverage YAML-based custom agents. Claude Code provides a CLI-first workflow with straightforward commands for adding servers across different scopes (local, project, or user), while also supporting direct JSON file editing for complex configurations. Cursor combines flexibility with ease-of-use through GUI-based setup in settings, JSON file configuration at project or global levels, and marketplace integration for one-click installations with OAuth support.

Platform-Specific Setup:

GitHub Copilot: Navigate to Repository Settings → Code & automation → Copilot → Coding agent → MCP configuration. Add JSON configurations defining server type (local, HTTP, SSE), required tools, and authentication. Store secrets with the `COPILOT_MCP_` prefix in the repository's Copilot environment. Enterprise users can configure MCP in custom agent YAML frontmatter.

Claude Code: Use CLI commands for quick setup: `claude mcp add --transport [http|sse|stdio] <name> <url|command>`. Specify scope with `--scope [local|project|user]` (local is the default). Set environment variables using `--env KEY=value` flags. Manage servers with `claude mcp list`, `claude mcp get <name>`, and `claude mcp remove <name>`. Configurations are stored in `~/.claude.json` (user/local scope) or `.mcp.json` (project scope).

Cursor: Go to Cursor Settings → Features → MCP → Add New MCP Server. Configure via the GUI or edit JSON files directly: `.cursor/mcp.json` (project-specific) or `~/.cursor/mcp.json` (global). Supports stdio, SSE, and HTTP transports with OAuth authentication. Composer Agent automatically uses relevant MCP tools when enabled. Environment variables can be set for API authentication.
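Because Claude Code's `.mcp.json` and Cursor's `.cursor/mcp.json` both use a top-level `mcpServers` object (as the examples in this guide show), a team can script additions instead of hand-editing them. This is a sketch, not an official tool: it only manages stdio entries with `command` and `args`, and it overwrites an existing entry of the same name while preserving the others.

```python
import json
import tempfile
from pathlib import Path
from typing import List


def upsert_mcp_server(config_path: Path, name: str, command: str, args: List[str]) -> dict:
    """Add or replace one stdio server in an mcpServers-style JSON file, keeping other entries."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config.setdefault("mcpServers", {})[name] = {"command": command, "args": args}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config


# Demo in a throwaway directory; point config_path at your project's .cursor/mcp.json in practice.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / ".cursor" / "mcp.json"
    upsert_mcp_server(path, "azure", "npx", ["-y", "@azure/mcp@latest", "server", "start"])
    final = upsert_mcp_server(
        path, "filesystem", "npx",
        ["-y", "@modelcontextprotocol/server-filesystem", "/workspace/tests"],
    )
    on_disk = json.loads(path.read_text())

print(sorted(final["mcpServers"]))  # ['azure', 'filesystem']
```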

MCP Servers for Development Stages

Overview

This guide provides practical examples of MCP (Model Context Protocol) servers that enhance each stage of the software development lifecycle: Design, Coding, Code Review, and Testing.

1. Design Stage: Mermaid Diagrams MCP Server

Purpose

For distributed systems developers, the Mermaid Diagrams MCP Server enables creation of architecture diagrams, sequence diagrams, flowcharts, and system designs directly within AI workflows. Ideal for documenting microservices architectures, data flows, and technical specifications.

Key Capabilities

Architecture Documentation: Generate sequence diagrams, component diagrams, and deployment views

System Design: Create flowcharts for distributed workflows and decision trees

Visual Communication: Convert natural language descriptions to diagrams

Version Control Friendly: Text-based diagrams that work with Git

Configuration Examples

GitHub Copilot Configuration

```json
{
  "mcpServers": {
    "mermaid": {
      "type": "local",
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-mermaid"],
      "tools": ["create_diagram", "render_diagram"]
    }
  }
}
```

Claude Code Configuration

```bash
claude mcp add --transport stdio mermaid \
  --scope project \
  -- npx -y @modelcontextprotocol/server-mermaid
```

Cursor Configuration

```json
{
  "mcpServers": {
    "mermaid": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-mermaid"]
    }
  }
}
```

Usage Example

Distributed System Architecture Design

```text
Prompt: "Create a sequence diagram showing the authentication flow in our
microservices architecture: API Gateway → Auth Service → User Service → Database"

AI Agent Actions:
1. Calls create_diagram with type="sequence"
2. Generates Mermaid syntax:

   sequenceDiagram
       Client->>API Gateway: POST /login
       API Gateway->>Auth Service: Validate credentials
       Auth Service->>User Service: Get user data
       User Service->>Database: Query user
       Database-->>User Service: User record
       User Service-->>Auth Service: User data
       Auth Service-->>API Gateway: JWT token
       API Gateway-->>Client: 200 OK + token

3. Renders visual diagram
```
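Because Mermaid is plain text, diagrams like the one above can also be generated or checked by ordinary code and diffed in Git. A minimal sketch, assuming the message list is something you (or an agent) extracted from the codebase; the `sequence_diagram` helper is hypothetical and not part of any MCP server:

```python
from typing import List, Tuple

# (sender, receiver, label, is_reply)
Message = Tuple[str, str, str, bool]


def sequence_diagram(messages: List[Message]) -> str:
    """Render messages as Mermaid sequenceDiagram text; replies use the dashed -->> arrow."""
    lines = ["sequenceDiagram"]
    for sender, receiver, label, is_reply in messages:
        arrow = "-->>" if is_reply else "->>"
        lines.append(f"    {sender}{arrow}{receiver}: {label}")
    return "\n".join(lines)


login_flow = [
    ("Client", "API Gateway", "POST /login", False),
    ("API Gateway", "Auth Service", "Validate credentials", False),
    ("Auth Service", "API Gateway", "JWT token", True),
    ("API Gateway", "Client", "200 OK + token", True),
]
diagram = sequence_diagram(login_flow)
print(diagram)
```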

2. Coding Stage: GitHub MCP Server

Purpose

The GitHub MCP Server provides deep repository context, enabling AI to understand project structure, dependencies, pull requests, issues, and code history without leaving the development environment.

Key Capabilities

Repository Context: Access file structure, README files, and documentation

Code Search: Find implementations, patterns, and dependencies across repositories

Pull Request Integration: Review PRs, fetch diffs, understand code changes

Issue Context: Link code to requirements and bug reports

Cross-Repository Analysis: Navigate dependencies in microservices architectures

Configuration Examples

GitHub Copilot Configuration

```json
{
  "mcpServers": {
    "github": {
      "type": "http",
      "url": "https://api.githubcopilot.com/mcp",
      "tools": ["*"],
      "headers": {
        "Authorization": "Bearer $COPILOT_MCP_GITHUB_PERSONAL_ACCESS_TOKEN",
        "X-MCP-Toolsets": "repos,issues,pull_requests,code_security,web_search"
      }
    }
  }
}
```

Setup: Create a fine-grained personal access token with read access to the required repositories, then add it as COPILOT_MCP_GITHUB_PERSONAL_ACCESS_TOKEN in the repository's Copilot environment.

Claude Code Configuration

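```bash
# Add GitHub's remote MCP server over HTTP.
# GITHUB_PAT is a fine-grained personal access token exported in your shell.
claude mcp add --transport http github https://api.githubcopilot.com/mcp \
  --header "Authorization: Bearer $GITHUB_PAT"
```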

Cursor Configuration

```json
{
  "mcpServers": {
    "github": {
      "type": "http",
      "url": "https://api.githubcopilot.com/mcp",
      "headers": {
        "Authorization": "Bearer YOUR_GITHUB_TOKEN",
        "X-MCP-Toolsets": "repos,issues,pull_requests,code_security"
      }
    }
  }
}
```

Usage Examples

Example 1: Cross-Service Implementation

```text
Prompt: "How does the payment service handle refunds? Show me the implementation
and any related code in the order service."

AI Agent Actions:
1. Searches "payment-service" repo for refund-related code
2. Finds RefundProcessor.java and related test files
3. Searches "order-service" repo for refund references
4. Identifies OrderRefundHandler.java that calls payment service
5. Provides implementation overview with code snippets
6. Shows API contract between services
```

Example 2: Dependency Analysis

```text
Prompt: "Which services depend on the user-auth library version 2.x?
I need to plan a migration to 3.x."

AI Agent Actions:
1. Searches across organization repositories for user-auth dependency
2. Identifies 12 services using version 2.x
3. Lists services with their current versions
4. Flags 2 services using deprecated authentication methods
5. Suggests migration order based on dependencies
```
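The "suggests migration order" step is essentially a topological sort over the services still on the old major version. A sketch using Python's standard `graphlib` (Python 3.9+), assuming you have already collected each service's `user-auth` version and its service-to-service dependencies, and assuming the policy that a service migrates after the services it depends on. The service names and versions below are made up.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def migration_order(versions: Dict[str, str], depends_on: Dict[str, Set[str]],
                    legacy_prefix: str = "2.") -> List[str]:
    """Order legacy services so each comes after the legacy services it depends on."""
    legacy = sorted(svc for svc, version in versions.items() if version.startswith(legacy_prefix))
    # Only edges between legacy services matter; already-migrated services impose no ordering.
    graph = {svc: {dep for dep in depends_on.get(svc, set()) if dep in legacy} for svc in legacy}
    return list(TopologicalSorter(graph).static_order())  # raises CycleError on cycles


versions = {  # hypothetical scan results
    "user-service": "2.3.1",
    "payment-service": "2.1.0",
    "order-service": "2.4.0",
    "inventory-service": "3.0.2",
}
depends_on = {
    "order-service": {"payment-service", "inventory-service"},
    "payment-service": {"user-service"},
}
print(migration_order(versions, depends_on))
```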

2B. Coding Stage (Alternative): Azure MCP Server

Purpose

The Azure MCP Server provides seamless integration between AI development tools and Azure cloud resources, enabling developers to understand Azure-specific configurations, infrastructure, and services within their codebase. Particularly valuable for cloud-native and distributed systems development on Azure.

Key Capabilities

Azure Resource Discovery: Understand Azure resources deployed in your subscription

Infrastructure Context: Access Azure-specific files (Bicep, ARM templates, Azure CLI scripts)

Configuration Analysis: Parse and understand Azure service configurations

Cloud-Native Development: Get context about Azure services your application uses

Resource Relationships: Understand dependencies between Azure resources

Configuration Examples

GitHub Copilot Configuration

Prerequisites:

1. Clone your repository locally.
2. Install the Azure Developer CLI.
3. Run `azd coding-agent config` in the repository root. This command automatically:
   - Creates `.github/workflows/copilot-setup-steps.yml` for Azure authentication
   - Adds the required secrets (`AZURE_CLIENT_ID`, `AZURE_TENANT_ID`, `AZURE_SUBSCRIPTION_ID`)
   - Configures OIDC authentication with Azure

MCP Configuration: Add to Repository Settings → Copilot → Coding agent → MCP configuration:

```json
{
  "mcpServers": {
    "azure": {
      "type": "local",
      "command": "npx",
      "args": ["-y", "@azure/mcp@latest", "server", "start"],
      "tools": ["*"]
    }
  }
}
```

Azure Authentication Workflow (copilot-setup-steps.yml):

```yaml
on:
  workflow_dispatch:

permissions:
  id-token: write
  contents: read

jobs:
  copilot-setup-steps:
    runs-on: ubuntu-latest
    environment: copilot
    steps:
      - name: Azure login
        uses: azure/login@v2
        with:
          client-id: ${{ secrets.AZURE_CLIENT_ID }}
          tenant-id: ${{ secrets.AZURE_TENANT_ID }}
          subscription-id: ${{ secrets.AZURE_SUBSCRIPTION_ID }}
```

Claude Code Configuration

```bash
# Add Azure MCP server
claude mcp add --transport stdio azure \
  --scope project \
  -- npx -y @azure/mcp@latest server start

# Requires Azure CLI authentication
az login
```

Manual JSON Configuration:

```json
{
  "mcpServers": {
    "azure": {
      "command": "npx",
      "args": ["-y", "@azure/mcp@latest", "server", "start"]
    }
  }
}
```

Cursor Configuration

```json
{
  "mcpServers": {
    "azure": {
      "command": "npx",
      "args": ["-y", "@azure/mcp@latest", "server", "start"]
    }
  }
}
```

Prerequisites: authenticate with the Azure CLI, then set the active subscription:

```bash
az login
az account set --subscription <subscription-id>
```

Usage Examples

Example 1: Understanding Azure Infrastructure

```text
Prompt: "What Azure resources are deployed for our application?
Explain how they're connected and what each service does."

AI Agent Actions:
1. Scans repository for Azure configuration files (Bicep, ARM, Terraform)
2. Queries Azure subscription for deployed resources
3. Identifies:
   - App Service (web-app-prod) hosting the API
   - Azure SQL Database (sqldb-orders) for data storage
   - Azure Key Vault (kv-secrets) for secrets management
   - Application Insights (appi-monitoring) for observability
4. Explains resource relationships and data flow
5. Provides infrastructure diagram in Mermaid format
```

Example 2: Azure-Aware Code Generation

```text
Prompt: "Add a new microservice that stores user preferences in Azure Cosmos DB.
Use our existing authentication pattern from Azure AD B2C."

AI Agent Actions:
1. Analyzes existing Azure infrastructure code
2. Identifies current Cosmos DB connection patterns
3. Finds Azure AD B2C authentication implementation
4. Generates new microservice with:
   - Cosmos DB SDK initialization using existing patterns
   - Azure AD B2C token validation middleware
   - Connection string retrieval from Key Vault
   - Application Insights instrumentation
5. Creates Bicep template for new Cosmos DB container
6. Updates infrastructure-as-code files
```

Benefits

Cloud-Native Context: AI understands your Azure architecture

Consistent Patterns: Reuse existing Azure service configurations

Infrastructure Awareness: Code generation respects deployed resources

Security Best Practices: Leverages Azure Key Vault and managed identities

DevOps Integration: Works with Azure DevOps and GitHub Actions

3. Code Review Stage: SonarQube MCP Server

Purpose

Integrates code quality and security analysis into development workflows, enabling pre-commit issue detection and continuous quality monitoring.

Key Capabilities

Static Analysis: Detect bugs, code smells, and technical debt

Security Scanning: Identify vulnerabilities and security hotspots

Quality Gates: Verify deployment readiness

Snippet Analysis: Analyze code before committing

Configuration Examples

GitHub Copilot Configuration

```json
{
  "mcpServers": {
    "sonarqube": {
      "type": "local",
      "command": "docker",
      "args": [
        "run", "--rm", "-i",
        "-e", "SONARQUBE_TOKEN",
        "-e", "SONARQUBE_ORG",
        "mcp/sonarqube"
      ],
      "env": {
        "SONARQUBE_TOKEN": "COPILOT_MCP_SONARQUBE_TOKEN",
        "SONARQUBE_ORG": "your-organization"
      },
      "tools": ["analyze_code_snippet", "get_quality_gate_status"]
    }
  }
}
```

Claude Code Configuration

```bash
claude mcp add sonarqube \
  --env SONARQUBE_TOKEN=your_token \
  --env SONARQUBE_ORG=your-org \
  --scope project \
  -- docker run -i --rm -e SONARQUBE_TOKEN -e SONARQUBE_ORG mcp/sonarqube
```

Cursor Configuration

```json
{
  "mcpServers": {
    "sonarqube": {
      "command": "docker",
      "args": [
        "run", "-i", "--rm",
        "-e", "SONARQUBE_TOKEN",
        "-e", "SONARQUBE_ORG",
        "mcp/sonarqube"
      ],
      "env": {
        "SONARQUBE_TOKEN": "your_token",
        "SONARQUBE_ORG": "your-org"
      }
    }
  }
}
```

Usage Examples

Example 1: Pre-Commit Security Scan

```text
Prompt: "Analyze this authentication function for security issues:

def authenticate(username, password):
    query = f"SELECT * FROM users WHERE user='{username}' AND pass='{password}'"
    return db.execute(query)
"

AI Agent Actions:
1. Calls analyze_code_snippet
2. Identifies CRITICAL SQL injection vulnerability
3. Reports plaintext password storage issue
4. Provides secure implementation using parameterized queries and hashing
```
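For reference, the kind of fix the agent proposes in step 4 looks roughly like the sketch below. It assumes SQLite (via Python's built-in `sqlite3`) and PBKDF2 from `hashlib`; the table layout is hypothetical, and a production system would more likely use a dedicated password-hashing library such as bcrypt or Argon2.

```python
import hashlib
import hmac
import os
import sqlite3
from typing import Optional, Tuple

ITERATIONS = 600_000  # PBKDF2 work factor; tune for your hardware


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived key); a fresh random salt is generated when none is given."""
    salt = salt if salt is not None else os.urandom(16)
    return salt, hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def authenticate(db: sqlite3.Connection, username: str, password: str) -> bool:
    # "?" placeholder: the driver binds username as data, so it can never alter the SQL.
    row = db.execute(
        "SELECT salt, pw_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, stored_hash = row
    _, candidate = hash_password(password, salt)
    return hmac.compare_digest(candidate, stored_hash)  # constant-time comparison


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (username TEXT PRIMARY KEY, salt BLOB, pw_hash BLOB)")
db.execute("INSERT INTO users VALUES (?, ?, ?)", ("alice", *hash_password("s3cret!")))
```

The two changes address the two findings separately: parameter binding closes the injection, and storing only a salted, slow hash means a leaked table no longer exposes passwords.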

Example 2: Deployment Readiness Check

```text
Prompt: "Can we deploy the payment-service to production? Check quality gates."

AI Agent Actions:
1. Calls get_quality_gate_status("payment-service")
2. Returns: PASSED
3. Summarizes metrics: 0 bugs, 0 vulnerabilities, 85% code coverage
4. Confirms deployment approval
```

4. Testing Stage: Filesystem MCP Server

Purpose

Provides direct file system access for test data management, test report analysis, and automated test artifact handling—essential for distributed systems testing with large datasets and log analysis.

Key Capabilities

Test Data Management: Read/write test fixtures, mock data, and configurations

Log Analysis: Parse test logs, performance metrics, and error traces

Report Processing: Access and analyze test results, coverage reports

Artifact Management: Handle screenshots, recordings, and test outputs

Configuration Examples

GitHub Copilot Configuration

```json
{
  "mcpServers": {
    "filesystem": {
      "type": "local",
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "/workspace/tests",
        "/workspace/test-reports"
      ],
      "tools": ["read_file", "list_directory", "search_files"]
    }
  }
}
```

Claude Code Configuration

```bash
claude mcp add --transport stdio filesystem \
  --scope project \
  -- npx -y @modelcontextprotocol/server-filesystem /workspace/tests /workspace/test-reports
```

Cursor Configuration

```json
{
  "mcpServers": {
    "filesystem": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "/workspace/tests",
        "/workspace/test-reports"
      ]
    }
  }
}
```

Usage Examples

Example 1: Test Failure Analysis

```text
Prompt: "Analyze the failed integration tests from yesterday's run.
The logs are in /workspace/test-reports/2025-01-02/"

AI Agent Actions:
1. Calls list_directory("/workspace/test-reports/2025-01-02/")
2. Identifies failed test logs: integration-test-failures.log
3. Calls read_file to analyze error patterns
4. Finds 5 failures related to database connection timeouts
5. Identifies root cause: connection pool exhaustion
6. Suggests configuration adjustments
```
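Steps 2 to 5 amount to grouping failures by error type so the dominant cause stands out. A minimal sketch, assuming a hypothetical log format of one `FAILED <test> - <ErrorType>: <message>` line per failure; adjust the regex to whatever your test runner actually emits.

```python
import re
from collections import Counter
from pathlib import Path
from typing import Iterable

# Hypothetical line format: "FAILED <test_name> - <ErrorType>: <message>"
FAILURE = re.compile(r"^FAILED\s+(\S+)\s+-\s+([\w.]+):")


def summarize_failures(lines: Iterable[str]) -> Counter:
    """Count failures per error type."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILURE.match(line.strip())
        if match:
            counts[match.group(2)] += 1
    return counts


def summarize_dir(report_dir: Path) -> Counter:
    """Aggregate every *.log file in a report directory."""
    total: Counter = Counter()
    for log_file in sorted(report_dir.glob("*.log")):
        total += summarize_failures(log_file.read_text().splitlines())
    return total


sample = [
    "PASSED test_create_order",
    "FAILED test_checkout - ConnectionTimeout: pool exhausted after 30s",
    "FAILED test_refund - ConnectionTimeout: pool exhausted after 30s",
    "FAILED test_inventory_sync - AssertionError: expected 5, got 4",
]
print(summarize_failures(sample).most_common())
```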

Example 2: Test Data Setup

```text
Prompt: "Create test fixtures for a distributed transaction test across
order, payment, and inventory services."

AI Agent Actions:
1. Generates mock data files with consistent IDs
2. Creates order-service-data.json with test orders
3. Creates payment-service-data.json with payment methods
4. Creates inventory-service-data.json with stock levels
5. Ensures referential integrity across services
6. Writes files to /workspace/tests/fixtures/distributed-tx/
```
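The key property in this example is referential integrity: every cross-service ID must resolve. A sketch of fixture generation with a built-in integrity check, assuming hypothetical field names (`order_id`, `sku`, `amount_cents`) and a made-up unit price; the random generator is seeded so fixtures are reproducible across runs.

```python
import json
import random
from pathlib import Path
from typing import Dict, List

Fixtures = Dict[str, List[dict]]


def make_fixtures(n_orders: int, seed: int = 42) -> Fixtures:
    """Build order/payment/inventory records that share IDs across services."""
    rng = random.Random(seed)  # seeded: same fixtures on every run
    skus = [f"SKU-{i:03d}" for i in range(1, 6)]
    inventory = [{"sku": sku, "stock": rng.randint(10, 100)} for sku in skus]
    orders, payments = [], []
    for i in range(1, n_orders + 1):
        order_id = f"ORD-{i:04d}"
        quantity = rng.randint(1, 3)
        orders.append({"order_id": order_id, "sku": rng.choice(skus), "quantity": quantity})
        payments.append({"payment_id": f"PAY-{i:04d}", "order_id": order_id,
                         "amount_cents": quantity * 1999})
    return {
        "order-service-data.json": orders,
        "payment-service-data.json": payments,
        "inventory-service-data.json": inventory,
    }


def check_integrity(fx: Fixtures) -> bool:
    """Every order references a real SKU; every payment references a real order."""
    skus = {row["sku"] for row in fx["inventory-service-data.json"]}
    order_ids = {row["order_id"] for row in fx["order-service-data.json"]}
    return (all(o["sku"] in skus for o in fx["order-service-data.json"])
            and all(p["order_id"] in order_ids for p in fx["payment-service-data.json"]))


def write_fixtures(fx: Fixtures, out_dir: Path) -> None:
    """Write one JSON file per service, e.g. into /workspace/tests/fixtures/distributed-tx/."""
    out_dir.mkdir(parents=True, exist_ok=True)
    for filename, rows in fx.items():
        (out_dir / filename).write_text(json.dumps(rows, indent=2))


fixtures = make_fixtures(5)
print(check_integrity(fixtures))
```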

Comparison Matrix

| Stage | MCP Server | Primary Use Case | Key Benefit | Setup Complexity |
|---|---|---|---|---|
| Design | Mermaid | Architecture diagrams | Visual system documentation | Low |
| Coding | GitHub | Repository context | Cross-service code understanding | Low |
| Coding | Azure | Cloud infrastructure context | Azure-aware code generation | Medium |
| Code Review | SonarQube | Quality & security | Pre-commit issue detection | Medium |
| Testing | Filesystem | Test data & logs | Efficient test artifact management | Low |

End-to-End Workflow Example

Scenario: Adding Rate Limiting to API Gateway

1. Design Phase

```text
Developer: "Create a sequence diagram showing how rate limiting will work
between API Gateway, Redis, and backend services."

Mermaid MCP: Generates diagram showing request flow with rate limit checks
```

2. Coding Phase - Option A: GitHub Context

```text
Developer: "Show me how other services implement rate limiting.
Check the auth-service and user-service repositories."

GitHub MCP: Finds RateLimiter.java in auth-service
            Shows Redis integration pattern and configuration
```

2. Coding Phase - Option B: Azure Context

```text
Developer: "Implement rate limiting using Azure Redis Cache.
Use our existing Azure infrastructure patterns."

Azure MCP: Analyzes deployed Azure Redis Cache instance
           Provides connection string retrieval from Key Vault
           Generates code using existing Azure SDK patterns
```

3. Code Review Phase

```text
Developer: "Analyze my rate limiter implementation for security and performance issues."

SonarQube MCP: Identifies race condition in counter increment
               Flags potential Redis key collision issue
               Suggests atomic increment operations
```

4. Testing Phase

```text
Developer: "Run load tests and analyze the results in /workspace/test-reports/load-test/"

Filesystem MCP: Reads test results, analyzes response time distributions
                Identifies 99th percentile latency spike at 1000 req/s
                Recommends connection pool tuning
```
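The race condition flagged in the Code Review Phase typically comes from a read-then-write counter (GET, add one, SET), where two concurrent requests can read the same value and both get through. The usual remedy is an atomic increment such as Redis `INCR`. Below is a sketch of a fixed-window limiter written against a tiny `incr`/`expire` interface. `InMemoryCounter` is a hypothetical stand-in so the example runs without Redis, and the key format and limits are assumptions. redis-py's `Redis` client exposes `incr(name)` and `expire(name, time)`, so it should slot into the same function.

```python
import threading
import time
from typing import Dict, Optional, Tuple


class InMemoryCounter:
    """Stand-in for Redis INCR/EXPIRE. The lock makes each increment atomic."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._data: Dict[str, Tuple[int, float]] = {}  # key -> (count, expires_at)

    def incr(self, key: str) -> int:
        with self._lock:
            count, expires_at = self._data.get(key, (0, float("inf")))
            if time.monotonic() >= expires_at:  # expired: start a fresh counter
                count, expires_at = 0, float("inf")
            self._data[key] = (count + 1, expires_at)
            return count + 1

    def expire(self, key: str, seconds: int) -> None:
        with self._lock:
            if key in self._data:
                count, _ = self._data[key]
                self._data[key] = (count, time.monotonic() + seconds)


def allow_request(store, client_id: str, limit: int, window_s: int,
                  now: Optional[float] = None) -> bool:
    """Fixed-window limiter: at most `limit` requests per client per window."""
    window = int((time.time() if now is None else now) // window_s)
    key = f"ratelimit:{client_id}:{window}"  # per-client, per-window key avoids collisions
    count = store.incr(key)  # atomic read-modify-write: no two callers see the same count
    if count == 1:
        # First hit sets the TTL. With Redis, a MULTI/EXEC pipeline or a Lua script
        # can make INCR + EXPIRE atomic as a pair.
        store.expire(key, window_s)
    return count <= limit


# 25 concurrent requests in the same window with a limit of 10: exactly 10 get through.
store = InMemoryCounter()
results = []
threads = [
    threading.Thread(target=lambda: results.append(
        allow_request(store, "client-a", limit=10, window_s=60, now=1_000.0)))
    for _ in range(25)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(results))
```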

Best Practices

Security

Use environment variables for all API tokens

Apply minimal permission scopes to tokens

Rotate credentials regularly

Never commit secrets to configuration files

Performance

Enable only required tools per server

Use project scope for team-shared configurations

Use user scope for personal tools

Configure appropriate timeouts for network operations

Team Adoption

Start with one server (e.g., GitHub for coding)

Document common prompts and workflows

Share configuration templates

Provide prompt engineering guidelines

Conclusion

Strategic deployment of MCP servers creates an integrated development environment that:

Reduces context switching by 60%+ through IDE-native tool access

Improves code quality via continuous analysis and feedback

Accelerates development with automated context retrieval

Enhances collaboration through shared, version-controlled configurations

For distributed systems development, these servers are particularly valuable for managing complexity across multiple services, repositories, and deployment environments.

Get Started Today

Begin your MCP integration journey by selecting one server that addresses your team's biggest pain point. If context switching between repositories slows your team, start with the GitHub MCP Server. For cloud-native teams on Azure, the Azure MCP Server provides immediate infrastructure awareness. Choose the tool that solves your most pressing workflow bottleneck, configure it following the examples above, and measure the impact over two weeks.

Once you've validated the value with one server, expand systematically across your development lifecycle. Share configuration templates with your team, document effective prompts specific to your codebase, and establish governance policies for enterprise deployments. The cumulative effect of MCP servers at each stage creates exponential productivity gains—teams report 40-60% reduction in manual tool navigation and 30% faster feature delivery after full adoption. Start small, prove value, then scale across your organization.

-Sanjai Ganesh